Continuing on from the previous post, it’s time to take a look at the customization requirements that brought about the creation of a custom bootstrap process for our desktop installations.

The customization capabilities within the Expert product are extensive: they support changes to the domain model, business processes and user interface. Many of these changes result in the need to deploy custom assemblies to the workstations running the Expert applications. If the out-of-the-box ClickOnce manifests were used to manage these changes, they would need to be updated by the customization process. Instead of doing this, we chose a solution similar to Google Chrome and created our own bootstrap mechanism to manage updating the client software. The ClickOnce infrastructure is used to ‘install an installer’. I’m not going to drill into the bootstrapper; Pete has already discussed some of the performance aspects here. Instead, we’ll walk through the process of creating an MSI to replace the ClickOnce-based installation.

This was not my first MSI authoring. In the past I’d been exposed to InstallShield, Wise and WiX, but I hadn’t done anything in the area for four or five years. The last installer I wrote used Windows Installer XML (WiX) when it had just been publicly released by Microsoft, and the memory was not a pleasant one. The good news is that in the intervening years my biggest issue with WiX has been resolved – there is now good documentation and a healthy community supporting it. Rather than waiting until the end to list a couple of resources, here are the main references I used:

The first thing to note is that WiX is a free, open source toolkit that is fully supported by Microsoft. It does not ship with any Visual Studio version and must be downloaded. The current version, and the version I used, is v3.5, though there is a v3.6 RC0 available. The actual download of the bits is available from CodePlex here; the SourceForge site links to this.

There are three components in the installer:

- WiX – command line tools, Xml schemas, extensions

- Votive – a Visual Studio plug-in

- Deployment Tools Foundation (DTF) – a managed library for programming against MSIs.

In addition to the WiX Toolkit, another tool to have is Orca which is available in the Windows SDK. Orca is an editor for the MSI database format, allowing an MSI to be easily inspected and edited.

The WiX toolset is summarized concisely by the following diagram (taken from http://wix.sourceforge.net/coretoolset.html)

While it is possible to work directly from the command line, the Visual Studio integration is a compelling option. The Extension Manager Online Gallery contains a WiX download, but this did not work for me. Instead, I downloaded and installed the MSI from the CodePlex site.

Votive, the Visual Studio plug-in, encourages making the installer part of your solution. It adds a number of project types to the product:

You can add the set-up project alongside the project containing the source code and maintain the whole solution together – installation should not be a last minute scramble.

Getting started is straightforward: you just add a reference in your set-up project to the project containing the application that you want to install. In our case, we want to install the bootstrapper contained in the ExpertAssistantCO project:

The set-up project is configured out of the box to generate a couple of WXS files for you based on the VS project file. The command-line tool HEAT can create a WXS file from a number of different sources, including a directory, a VS project or an existing MSI. To enable HEAT, set the Harvest property to true; the WXS files are then re-created on each build based upon the project file. By simply adding a project reference to the set-up project, you will have an MSI on the next solution compile. The intermediary files can be found by choosing to show all files in the Solution Explorer:

The obj\Debug subfolder will contain the generated .wxs files and the .wixobj files compiled from them. The bin\Debug subfolder is the default location for the MSI. The two files of interest are ExpertAssistantCO.wxs, which contains the files from the referenced ExpertAssistantCO project, and Product.wxs, which contains configuration information for the installation process. Building the project will invoke the WiX tooling: candle, the compiler (and preprocessor) that transforms .wxs files into .wixobj files, and light, the linker that processes the .wixobj files to create an MSI.

Of course, the out-of-the-box experience can only go so far, and so the generated WXS likely needs to be augmented. Our approach was to use HEAT (via the Harvest option) to generate the initial WXS files and then disable the generation. The ExpertAssistantCO.wxs file was moved into the project for manual editing, and the Product.wxs file contains the bulk of the custom code.
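
For reference, the Harvest setting surfaces in the set-up project's .wixproj file as metadata on the project reference. The fragment below is a sketch of how such a reference might look once harvesting has been switched off; the relative path, the .csproj extension and the placeholder project GUID are assumptions, not values taken from our solution.

```xml
<!-- Sketch of a .wixproj project reference; the path and GUID are placeholders. -->
<ItemGroup>
  <ProjectReference Include="..\ExpertAssistantCO\ExpertAssistantCO.csproj">
    <Name>ExpertAssistantCO</Name>
    <Project>{????????-????-????-????-????????????}</Project>
    <Private>True</Private>
    <!-- True switches off HEAT harvesting for this reference. -->
    <DoNotHarvest>True</DoNotHarvest>
    <RefProjectOutputGroups>Binaries;Content;Satellites</RefProjectOutputGroups>
    <RefTargetDir>INSTALLLOCATION</RefTargetDir>
  </ProjectReference>
</ItemGroup>
```

Even with harvesting disabled, the reference is what makes preprocessor variables such as $(var.ExpertAssistantCO.TargetDir) available to the hand-edited WXS files.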

The ExpertAssistantCO.wxs file contains the list of files involved in the project; this includes source files, built files and documentation. Each file is wrapped in a separate Fragment and given a unique component Id:

<Fragment>
  <DirectoryRef Id="INSTALLLOCATION">
    <Component Id="cmpExpertAssistantCOexe" Guid="????????-????-????-????-????????????" KeyPath="yes">
      <File Id="filExpertAssistantCOexe" Source="$(var.ExpertAssistantCO.TargetDir)\ExpertAssistantCO.exe" />
    </Component>
  </DirectoryRef>
</Fragment>

The generated code has been changed to specify an explicit GUID and to set the KeyPath attribute. The actual GUID has been replaced with ?s. The KeyPath attribute is used to determine if the component already exists – more here. The shouting INSTALLLOCATION is an example of a public property; in the MSI world, public properties are declared in full uppercase. The directory the file will be installed into is declared in the Product.wxs file and referenced here. The $(var.ExpertAssistantCO.TargetDir) demonstrates a preprocessor variable that allows VS solution properties to be accessed, in this case to determine the source location of the file.
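
For comparison, a frequently seen variant marks the file, rather than the component, as the key path. As I understand the WiX schema, KeyPath="yes" on the Component makes the component's install directory the key path, whereas KeyPath="yes" on the File makes Windows Installer look for that specific file. The sketch below reuses the identifiers from the fragment above and is an alternative for illustration, not the markup used in this installer.

```xml
<!-- Alternative sketch: the file itself, not the directory, is the key path. -->
<Fragment>
  <DirectoryRef Id="INSTALLLOCATION">
    <Component Id="cmpExpertAssistantCOexe" Guid="????????-????-????-????-????????????">
      <File Id="filExpertAssistantCOexe"
            KeyPath="yes"
            Source="$(var.ExpertAssistantCO.TargetDir)\ExpertAssistantCO.exe" />
    </Component>
  </DirectoryRef>
</Fragment>
```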

Components can be grouped together to provide more manageable units, for example:

<Fragment>
  <ComponentGroup Id="ExpertAssistantCO.Binaries">
    <ComponentRef Id="cmpExpertAssistantCOexe" />
    <ComponentRef Id="cmpICSharpCodeZipLib" />
    <ComponentRef Id="cmpAderantDeploymentClient" />
  </ComponentGroup>
</Fragment>
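
A component group like this only ends up in the MSI once something in the product references it, typically a feature. The following is a minimal sketch of that reference; the feature Id and Title are hypothetical names chosen for illustration.

```xml
<!-- Sketch: pulls the grouped components into the product via a feature. -->
<!-- The Id and Title values are illustrative, not taken from our installer. -->
<Feature Id="ProductFeature" Title="ExpertAssistant" Level="1">
  <ComponentGroupRef Id="ExpertAssistantCO.Binaries" />
</Feature>
```

Referencing the group rather than the individual components means files can be added to or removed from the group without touching the feature definition.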

The generated referencedProject.wxs file contains each file declared within a component and then logical groupings for the components. The more interesting aspects of WiX belong to the Product.wxs file. This is where registry keys, shortcuts, remove actions and other items are set up.

On to Product.wxs then, and the first line:

<Wix xmlns="http://schemas.microsoft.com/wix/2006/wi"
     xmlns:util="http://schemas.microsoft.com/wix/UtilExtension"
     RequiredVersion="3.5.0.0">

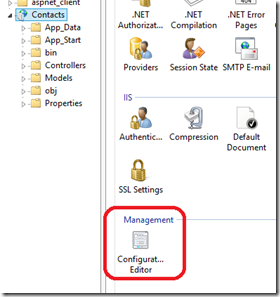

Here we are referencing two namespaces: the default namespace is the standard WiX schema, and the second, util, enables the use of a WiX extension library. Extension libraries contain additional functionality, usually grouped by a common thread. The library needs to be added as a project reference:

Available extension libraries can be found in C:\Program Files (x86)\Windows Installer XML v3.5\bin :

A drill-down into the various extension schemas is provided here. The utility extension referenced above allows XML file manipulation, which we will see later. The RequiredVersion attribute sets the version of WiX we are depending on to compile the file.

Next we define the product:

<Product Id="????????-????-????-????-????????????"
         Name="ExpertAssistant"
         Language="1033"
         Codepage="1252"
         Version="8.0.0.0"
         Manufacturer="Aderant"
         UpgradeCode="????????-????-????-????-????????????">

The GUIDs are used to uniquely identify the product installer and so you want to ensure you create a valid GUID using tooling such as GuidGen.exe. Following the product, we define the package:

<Package InstallerVersion="200"
         Compressed="yes"
         Manufacturer="Aderant"
         Description="Expert Assistant Installer"
         InstallScope="perUser"
/>

The attribute worth calling out here is InstallScope. This can be set to perMachine or perUser; setting it to perMachine requires elevated privileges to install. We want to offer an install to all users without requiring elevated privileges, and so have a per-user install. This has implications later on when we have to set up user-specific items such as Start Menu shortcuts.

Next we move onto properties:

<!-- Properties -->
<Property Id="EXPERTSHAREPATH" Value="\\MyShare\ExpertShare" />
<Property Id="EXPERTENVIRONMENTNAME" Value="MyEnvironment" />
<Property Id="EXPERTLOCALINSTALLPATH" Value="C:\AderantExpert\" />
<Property Id="ARPPRODUCTICON" Value="icon.ico" />
<Property Id="ARPNOMODIFY" Value="1" />
<Property Id="WixShellExecTarget" Value="[#filExpertAssistantCOexe]" />

The first three properties are custom public properties defined for this installer; public property names must be all upper case. The value of a public property can be supplied on the msiexec command line or via an MST file to customize the installation.

The properties with a prefix of ARP relate to the Add Remove Programs control panel, now Programs and Features in Windows 7. The ARPPRODUCTICON is used to set the icon that appears in the installed programs list. The ARPNOMODIFY property removes the Change option from the control panel options:

In contrast, Visual Studio SP1 supports the Change option:

Other ARP properties can be found here.

The final property WixShellExecTarget specifies the file to be executed when the install completes. This is a required parameter of a custom action that we will come to later. The [#filExpertAssistantCOexe] is a reference to a file declared in the ExpertAssistantCO.wxs file.

<File Id="filExpertAssistantCOexe" Source="$(var.ExpertAssistantCO.TargetDir)\ExpertAssistantCO.exe" />

Next up we come to one of the areas that stumped me for a while: how to set a property value. Some attributes can directly reference a property by surrounding the property name in [] and have the value swapped in, e.g.

<Directory Id="dirEnvironmentFolder" Name="[EXPERTENVIRONMENTNAME]" >

However this is not supported when setting properties, therefore the following does not result in substitution:

<Property Id="Composite" Value="[PROPERTY1] and [PROPERTY2]" />

Instead the SetProperty element is used:

<!-- Set-up environment specific properties -->
<SetProperty Id="dirEnvironmentFolder" Value="C:\AderantExpert\[EXPERTENVIRONMENTNAME]" After="CostInitialize" />
<SetProperty Id="EXPERTLOCALINSTALLPATH" Value="C:\AderantExpert\[EXPERTENVIRONMENTNAME]\Applications" After="CostInitialize" />
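
As far as I can tell, SetProperty is shorthand for a property-setting custom action plus its scheduling. The sketch below shows roughly what the second SetProperty element expands to. It assumes the default behaviour of naming the action "Set" followed by the property Id and of scheduling it in both the UI and execute sequences, so treat the details as an approximation rather than the exact generated output.

```xml
<!-- Approximate long-hand equivalent of the EXPERTLOCALINSTALLPATH SetProperty. -->
<CustomAction Id="SetEXPERTLOCALINSTALLPATH"
              Property="EXPERTLOCALINSTALLPATH"
              Value="C:\AderantExpert\[EXPERTENVIRONMENTNAME]\Applications" />

<!-- Assumed default: the action is scheduled in both sequences. -->
<InstallUISequence>
  <Custom Action="SetEXPERTLOCALINSTALLPATH" After="CostInitialize" />
</InstallUISequence>
<InstallExecuteSequence>
  <Custom Action="SetEXPERTLOCALINSTALLPATH" After="CostInitialize" />
</InstallExecuteSequence>
```

Because the value is evaluated when the action runs, the [EXPERTENVIRONMENTNAME] reference is resolved at install time, which is what a plain Property declaration cannot do.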

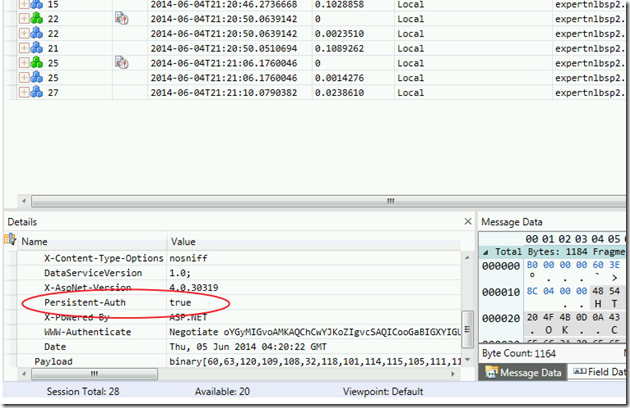

When setting the property, the appropriate time to perform the action needs to be set using either the Before or After attribute. This injects the action into the appropriate place in the list of actions to perform. To determine the order, I used Orca to view the InstallExecuteSequence:

A final property that is set is the EnableUserControl, which allows the installer to pass all public properties to the server side during a managed install.

<Property Id="EnableUserControl" Value="1" />

Note: the preferred approach is to set the Secure attribute individually to Yes on each property declaration that supports user control (I have only just learnt this while writing up the posting).
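
A minimal sketch of that preferred approach, applied to the three custom public properties declared earlier, might look like the following. It would replace the EnableUserControl property rather than sit alongside it; I have not yet switched our installer over, so take it as an illustration only.

```xml
<!-- Secure="yes" marks each public property as one that may be passed to the server side. -->
<Property Id="EXPERTSHAREPATH" Value="\\MyShare\ExpertShare" Secure="yes" />
<Property Id="EXPERTENVIRONMENTNAME" Value="MyEnvironment" Secure="yes" />
<Property Id="EXPERTLOCALINSTALLPATH" Value="C:\AderantExpert\" Secure="yes" />
```

This limits what the installer passes through to the named properties instead of opening up every public property.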

A final element for this post is Icon which specifies an icon file.

<Icon Id="icon.ico" SourceFile="$(var.ExpertAssistantCO.TargetDir)\Expert_Assistant_Icon.ico" />

The icon Id is the value referenced by the ARPPRODUCTICON property to set the icon seen in the installed programs control panel.

There’s more to come, however this post has become long enough in its own right. In the next post I’ll drill into setting registry keys, determining the install location, Start Menu settings and more.

So far we’ve walked through the following WiX code:

<?xml version="1.0" encoding="UTF-8"?>
<Wix xmlns="http://schemas.microsoft.com/wix/2006/wi" xmlns:util="http://schemas.microsoft.com/wix/UtilExtension" RequiredVersion="3.5.0.0">
  <Product Id="????????-????-????-????-????????????"
           Name="ExpertAssistant"
           Language="1033"
           Codepage="1252"
           Version="8.0.0.0"
           Manufacturer="Aderant"
           UpgradeCode="????????-????-????-????-????????????">

  <Package InstallerVersion="200"
           Compressed="yes"
           Manufacturer="Aderant"
           Description="Expert Assistant Installer"
           InstallScope="perUser"
  />

  <!-- Public Properties -->
  <Property Id="EXPERTSHAREPATH" Value="\\MyShare\ExpertShare" />
  <Property Id="EXPERTENVIRONMENTNAME" Value="MyEnvironment" />
  <Property Id="EXPERTLOCALINSTALLPATH" Value="C:\AderantExpert\Environment\Applications" />
  <Property Id="ARPPRODUCTICON" Value="icon.ico" />
  <Property Id="ARPNOMODIFY" Value="1" />
  <Property Id="WixShellExecTarget" Value="[#filExpertAssistantCOexe]" />

  <!-- Set-up environment specific properties -->
  <SetProperty Id="dirEnvironmentFolder" Value="C:\AderantExpert\[EXPERTENVIRONMENTNAME]" After="CostInitialize" />
  <SetProperty Id="EXPERTLOCALINSTALLPATH" Value="C:\AderantExpert\[EXPERTENVIRONMENTNAME]\Applications" After="CostInitialize" />

  <!-- Allow all users to access the properties, not just elevated users -->
  <Property Id="EnableUserControl" Value="1" />

  <!-- Icons -->
  <Icon Id="icon.ico" SourceFile="$(var.ExpertAssistantCO.TargetDir)\Expert_Assistant_Icon.ico" />